Liveness Detection in Facial Biometric Systems: a state of the art

Biometrics is the science and technology of measuring and analysing biological data. In information technology, biometrics refers to technologies that measure and analyse human body characteristics, such as DNA, fingerprints, eye retinas and irises, voice patterns, facial patterns and hand measurements, for authentication purposes. [1]

Of the multitude of biometric applications, facial recognition is emerging as the most successful, and it is now the second most widely used technology after fingerprinting. [2] It is a reliable means of both identification and authentication, and its non-intrusive nature gives it a high user acceptance rate.

Pioneering efforts in the deployment of automated facial recognition systems date back to the very beginning of the 21st century, with installations at the Super Bowl stadium in Tampa, Florida, at Logan Airport in Boston, at Palm Beach International Airport in Florida and at Sydney Airport. Although the performance of these systems was unsatisfactory and they had to be dismantled shortly after installation, the results of the experiments were encouraging. The International Civil Aviation Organization (ICAO) has identified face recognition as the biometric best suited to airport and border control applications. [3]

There are several ways in which face detection and recognition algorithms are developed, but they can generally be divided into two categories: holistic and local. The holistic approach uses the entire face image for recognition, e.g., the principal component analysis (PCA) method, or Eigenface, and the linear discriminant analysis (LDA) method, or Fisherface. [4] This approach is widely adopted; however, its recognition accuracy suffers from scaling, rotation and translation of the input signal. The second group of feature extraction techniques consists of algorithms that divide a facial image into several regions; features are extracted from each region and compared independently of the other regions, and the comparison scores are combined via a fusion rule at the end. Local features are less sensitive than holistic ones to incorrect geometric normalization of the input signal, which generally results in better performance, particularly in tests involving automatically localized faces. [5]
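The local approach can be illustrated with a minimal sketch, assuming a grayscale face image that is already cropped and aligned and is given as a list of rows of 0..255 pixel values. Each region is described by a normalised intensity histogram, corresponding regions are compared with histogram intersection, and the region scores are fused with the mean rule. The histogram features, the intersection measure, the 4 x 4 grid and all function names are illustrative assumptions; practical systems use much richer local descriptors.

```python
from typing import List

Image = List[List[int]]  # hypothetical input format: grayscale rows, values 0..255


def block_histograms(img: Image, grid: int = 4, bins: int = 16) -> List[List[float]]:
    """Split the image into grid x grid regions and return one normalised histogram per region."""
    h, w = len(img), len(img[0])
    bh, bw = h // grid, w // grid
    feats = []
    for gy in range(grid):
        for gx in range(grid):
            hist = [0] * bins
            for y in range(gy * bh, (gy + 1) * bh):
                for x in range(gx * bw, (gx + 1) * bw):
                    hist[img[y][x] * bins // 256] += 1  # map 0..255 to a bin index
            total = sum(hist) or 1  # guard against empty regions on tiny images
            feats.append([c / total for c in hist])
    return feats


def region_score(h1: List[float], h2: List[float]) -> float:
    """Histogram intersection: 1.0 for identical regions, 0.0 for disjoint ones."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def local_match(probe: Image, model: Image, grid: int = 4) -> float:
    """Compare each region independently, then fuse the region scores with the mean rule."""
    p, m = block_histograms(probe, grid), block_histograms(model, grid)
    scores = [region_score(a, b) for a, b in zip(p, m)]
    return sum(scores) / len(scores)
```

Because every region is scored separately, a local change such as glasses lowers only the scores of the regions it covers, which is one intuition behind the robustness of local methods.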

Like all biometric systems, face recognition systems have to deal with several challenges, the main ones being:

- illumination changes

- head pose

- facial expressions

- variations in facial appearance (glasses, make-up, etc.)

Furthermore, one more drawback, of a different kind but of particular importance to this study, must be added to this list. Matching its high user acceptance rate is the relative ease with which replay attacks can be delivered to facial biometric systems.

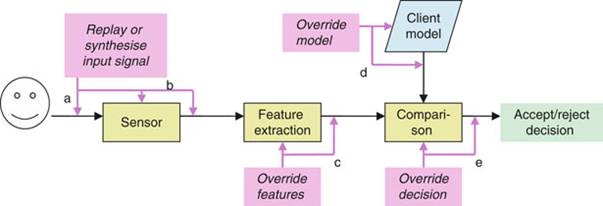

Like most biometric systems, these attacks can be delivered at several points, as shown in Fig. 1. [6] Starting from the least to the most likely intrusion point, as shown in Fig. 1e, compromising the Comparison Module of the system would allow the attacker to overwrite the threshold (accept/reject) score and thus be granted access with any face presented to the camera. Gaining access to the stored client models of the system, Fig. 1d, translates into identity theft. The attacker would replace the model of a real client with his own and thus be falsely accepted by the system as an authorised client. The Feature Extraction stage, as shown in Fig. 1 represents the point from which attacks completely bypass the input of the facial image by presenting fake features of a client’s face to the system. If access to the inside of the camera or to the connection between the camera and the rest of the system is obtained, as shown in Fig. 1b, the attacker will not be required to exhibit a “face” to the camera anymore; he can directly inject into the system a suitable stream of data that corresponds to the face image of an authorised user.

However, the part of the entire facial biometric verification system that is most vulnerable and susceptible to attacks is found in the Sensor module. This chapter will now turn its focus onto some of the different ways the system can be attacked from the sensor and the respective ways in which these can be prevented.

Figure 1 Potential points of attack in a face verification system

In a fully automated system, an attacker attempting to impersonate an authorised user can present a high-quality photograph of that user to the sensor and be falsely accepted. Although no scenario should rely on a single security measure (face recognition in this case), the use of biometrics should have shifted the authentication/identification paradigm from “something you possess” to a combination of “something you possess” and “something you are”. However, with the wide availability of high-resolution cameras and easy access to the Internet, a facial image of the person to be impersonated is rarely difficult to obtain, which nullifies the benefit of the biometrics. To make things even worse, an attacker nowadays does not even need to print the image, as it can simply be displayed on a laptop or tablet computer screen. [7]

Liveness detection: different approaches

Several methods have been suggested to protect face biometric systems from replay attacks. In an uncontrolled environment, possibly the only way to prevent this type of attack is by implementing an appropriate liveness detection mechanism, which decides whether the captured feature comes from a live source.

Using multiple experts, such as voice recognition together with lip movement synchronisation, can greatly reduce the false acceptance rate (FAR) of a face verification system [8, 9]. Furthermore, systems that employ thermal, infrared or 3D sensors have little difficulty in distinguishing a human face from a photograph, an LCD screen or even a mask. However, in most systems hardware changes (such as the addition of a voice sensor) are not possible, and hardware upgrades (to systems that use more sophisticated video sensors) are not feasible.
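The FAR mentioned here, and its counterpart the false rejection rate (FRR), can be made concrete with a small sketch. For an accept-if-score-is-at-least-threshold rule, FAR is the fraction of impostor attempts that are accepted and FRR the fraction of genuine attempts that are rejected. The weighted-sum fusion of a face expert and a voice/lip-sync expert is only one simple fusion scheme, assumed here for illustration (not necessarily the scheme used in [8, 9]), and all scores are hypothetical values normalised to [0, 1].

```python
from typing import Sequence, Tuple


def far_frr(genuine: Sequence[float], impostor: Sequence[float],
            threshold: float) -> Tuple[float, float]:
    """Return (FAR, FRR) for the rule 'accept if score >= threshold'."""
    far = sum(s >= threshold for s in impostor) / len(impostor)  # impostors accepted
    frr = sum(s < threshold for s in genuine) / len(genuine)     # genuine users rejected
    return far, frr


def fuse(face: float, voice: float, w_face: float = 0.5) -> float:
    """Weighted-sum fusion of two experts whose scores are normalised to [0, 1]."""
    return w_face * face + (1.0 - w_face) * voice


# Hypothetical scores: photo attacks score well on the face expert
# but poorly on the voice/lip-sync expert.
face_genuine, voice_genuine = [0.90, 0.80, 0.85, 0.70], [0.80, 0.90, 0.75, 0.85]
face_impostor, voice_impostor = [0.75, 0.30, 0.80, 0.20], [0.10, 0.20, 0.15, 0.30]

print(far_frr(face_genuine, face_impostor, 0.6))  # (0.5, 0.0)
fused_genuine = [fuse(f, v) for f, v in zip(face_genuine, voice_genuine)]
fused_impostor = [fuse(f, v) for f, v in zip(face_impostor, voice_impostor)]
print(far_frr(fused_genuine, fused_impostor, 0.6))  # (0.0, 0.0)
```

In this made-up example the photo attacks fool the face expert alone but not the fused decision, which is the effect a multi-expert system aims for.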

Therefore, the real challenge is to make the most basic systems, those that use ordinary video cameras and no other biometric input, liveness-aware.

One of the more popular liveness detection techniques is therefore eye blink detection. [10–12] It is an elegant solution to the liveness detection problem: it integrates easily into existing products, requires no additional hardware and needs no user interaction. Although such mechanisms work, they do not completely solve the spoofing problem. To begin with, humans do not blink very often: typically once every four to six seconds, and intervals of 15 seconds or more are not uncommon. [13] Thus, in scenarios where user processing time has a high priority (e.g., airports), a system requiring some collaboration from the user might be preferred in order to save time.
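The works cited above use conditional random fields [10, 11] and Gabor responses [12]; the sketch below swaps in a different and simpler technique, the eye aspect ratio (EAR) computed from six eye landmarks, purely to show the shape of a blink-based liveness check. It assumes an upstream landmark detector (not shown) supplies the six (x, y) eye points per frame; the 0.2 threshold, the two-frame minimum and the 10-second window are illustrative values, not tuned parameters.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(p: Sequence[Point]) -> float:
    """EAR from landmarks p1..p6: p1/p4 are the eye corners,
    p2/p3 lie on the upper lid and p6/p5 on the lower lid."""
    vertical = math.dist(p[1], p[5]) + math.dist(p[2], p[4])
    horizontal = math.dist(p[0], p[3])
    return vertical / (2.0 * horizontal)  # drops towards 0 as the eye closes


def count_blinks(ear_series: Sequence[float], closed_thresh: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below the threshold."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < closed_thresh:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the sequence may end while the eye is still closed
        blinks += 1
    return blinks


def blink_liveness(ear_series: Sequence[float], fps: float, window_s: float = 10.0) -> bool:
    """Hypothetical rule: declare 'live' if at least one blink occurs within the time window."""
    frames = int(fps * window_s)
    return count_blinks(ear_series[:frames]) >= 1
```

The time window makes the trade-off discussed above visible: a window short enough for high-throughput scenarios may end before a legitimate user has blinked.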

However, there are scenarios where it is reasonable to spend a few extra seconds on authentication, such as high-security facilities, and it is here that the more serious problem of using eye blinking for liveness detection becomes apparent: the impostor can easily cut out the eye region of a photograph, present it to the sensor while standing behind it, and align his own eyes with those in the photograph.

Another interesting technique that requires no additional hardware is the analysis of the trajectories of individual parts of a live face, which carry valuable information for discriminating it from a spoofed one. The system proposed by Kollreider et al. [14] uses a novel lightweight optical flow with a model-based local Gabor decomposition and SVM experts for face part detection. Test results are very promising, showing a low false rejection rate (FRR) and FAR; however, a video of the subject is still reasonably easy to obtain, so this type of solution is not sufficient on its own.
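A heavily simplified sketch of using part trajectories follows, assuming an upstream tracker (for instance optical flow, not shown) already provides per-frame image positions of a few named face parts. The nose-versus-ears comparison, the part names and the 0.2 margin are hypothetical choices for illustration; this is not the system of [14], which relies on optical flow with Gabor decomposition and SVM experts.

```python
import math
from typing import Dict, List, Tuple

Track = List[Tuple[float, float]]  # (x, y) image position of one face part in successive frames


def path_length(track: Track) -> float:
    """Total image-plane distance travelled by a face part across the frames."""
    return sum(math.dist(a, b) for a, b in zip(track, track[1:]))


def centre_to_outer_ratio(tracks: Dict[str, Track], centre: str = "nose",
                          outer: Tuple[str, ...] = ("left_ear", "right_ear")) -> float:
    """Motion of the central part divided by the mean motion of the outer parts."""
    centre_motion = path_length(tracks[centre])
    outer_motion = sum(path_length(tracks[name]) for name in outer) / len(outer)
    if outer_motion == 0:
        # A completely static scene gives ratio 1, i.e. no evidence of depth.
        return 1.0 if centre_motion == 0 else float("inf")
    return centre_motion / outer_motion


def suggests_live(tracks: Dict[str, Track], margin: float = 0.2) -> bool:
    """Hypothetical rule: when a 3D head turns, the nose sweeps further in the image
    than the ears; a flat photograph shifted as a whole moves all parts together."""
    return centre_to_outer_ratio(tracks) > 1.0 + margin
```

As the text notes, a replayed video of a real head turning would reproduce the same motion pattern, so such a cue cannot stand alone.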

To counter the replay of a video on a tablet held in front of the sensor, a solution that considers not only the face but also the background has been proposed. [15] The background of a video of the subject recorded at a ceremony, for example, will certainly differ from that of an airport gate entrance. Although, at the time of writing, this is the latest published research on liveness detection that requires no changes to the original hardware of the system, it is safe to assume that careful video editing remains a possible threat to a system that implements this anti-spoofing technique.
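The scene-context idea can be sketched as follows, assuming a fixed camera, a stored reference image of the empty scene at the installation, and a face bounding box supplied by an upstream detector. Plain pixel differencing outside the face box is a deliberately simple stand-in, not the scene-context features used in [15], and the 20-grey-level threshold is a hypothetical value.

```python
from typing import List, Tuple

Image = List[List[int]]           # hypothetical format: grayscale rows, values 0..255
Box = Tuple[int, int, int, int]   # face bounding box (x0, y0, x1, y1), end-exclusive


def background_difference(frame: Image, reference: Image, face: Box) -> float:
    """Mean absolute difference between the frame and the stored reference, ignoring the face box."""
    x0, y0, x1, y1 = face
    total, count = 0, 0
    for y, (row, ref_row) in enumerate(zip(frame, reference)):
        for x, (p, r) in enumerate(zip(row, ref_row)):
            if x0 <= x < x1 and y0 <= y < y1:
                continue  # skip the face; only the surrounding scene is checked
            total += abs(p - r)
            count += 1
    return total / count if count else 0.0


def scene_consistent(frame: Image, reference: Image, face: Box, max_diff: float = 20.0) -> bool:
    """Hypothetical rule: the scene around the face must match the installation's reference."""
    return background_difference(frame, reference, face) <= max_diff
```

A video whose background has been edited to match the reference would pass this check, which is consistent with the caveat above.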

This paper therefore proposes a new approach to the spoofing problem in face biometrics that takes into account the liveness detection methods available today. The proposed method bases its liveness evaluation on the physical/chemical characteristics of a human face, as opposed to those of a photograph or a computer monitor.

A test was run on two face recognition programs, Blink! and KeyLemon, which are promoted as substitutes for passwords in the Windows operating system authentication process. The test consisted of enrolling a user and then testing the authentication process. In the authentication phase, a low-quality photograph of the enrolled user, shown on the low-resolution display of an ordinary smartphone, was presented to the sensor (webcam). Both programs failed the spoofing test: the “intruder” was granted access within seconds, effortlessly, using everyday hardware and a publicly available image of the enrolled user.

In the tested scenario, adding biometric authentication to the computer system not only fails to improve its security but actually reduces it, by introducing an unacceptable single point of failure.

References

[1] Definition of biometrics. Available from: http://searchsecurity.techtarget.com/sDefinition/0,,sid14_gci211666,00.html.

[2] G. Wei and D. Li, “Biometrics: Applications, Challenges and the Future”, pp. 135–149, 2006.

[3] ICAO activities. Available from: http://www.policylaundering.org/keyplayers/ICAO-activities.html.

[4] B. Moghaddam and A. Pentland, “Probabilistic visual learning for object representation”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 19, pp. 696–710, 1997.

[5] K. Kryszczuk and A. Drygajlo, “Addressing the Vulnerabilities of Likelihood-Ratio-Based Face Verification”, vol. 3546, pp. 1–19, 2005.

[6] M. Wagner and G. Chetty, “Liveness Assurance in Face Authentication”, pp. 908–916, 2009.

[7] Tablet computer. Available from: http://en.wikipedia.org/wiki/Tablet_computer.

[8] I. Naseem and A. Mian, “User Verification by Combining Speech and Face Biometrics in Video”, vol. 5359, pp. 482–492, 2008.

[9] G. Chetty, “Robust Audio Visual Biometric Person Authentication with Liveness Verification”, vol. 282, pp. 59–78, 2010.

[10] L. Sun, G. Pan, Z. Wu and S. Lao, “Blinking-Based Live Face Detection Using Conditional Random Fields”, vol. 4642, pp. 252–260, 2007.

[11] G. Pan, L. Sun, Z. Wu and S. Lao, “Eyeblink-based Anti-spoofing in Face Recognition from a Generic Webcamera”, in Computer Vision, 2007. ICCV 2007. IEEE 11th International Conference, 2007, pp. 1–8.

[12] J-W Li, “Eye Blink Detection Based on Multiple Gabor Response Waves”, in Machine Learning and Cybernetics, 2008 International Conference, 2008, pp. 2852–2856.

[13] J. Stern, “Why do we blink?”, 1999. Available from: http://www.msnbc.msn.com/id/3076704/.

[14] K. Kollreider, H. Fronthaler and J. Bigun, “Evaluating Liveness by Face Images and the Structure Tensor”, in Automatic Identification Advanced Technologies, 2005. Fourth IEEE Workshop, 2005, pp. 75–80.

[15] G. Pan, L. Sun, Z. Wu and Y. Wang, “Monocular Camera-based Face Liveness Detection by Combining Eyeblink and Scene Context”, Telecommunication Systems, pp. 1–11, 2010.